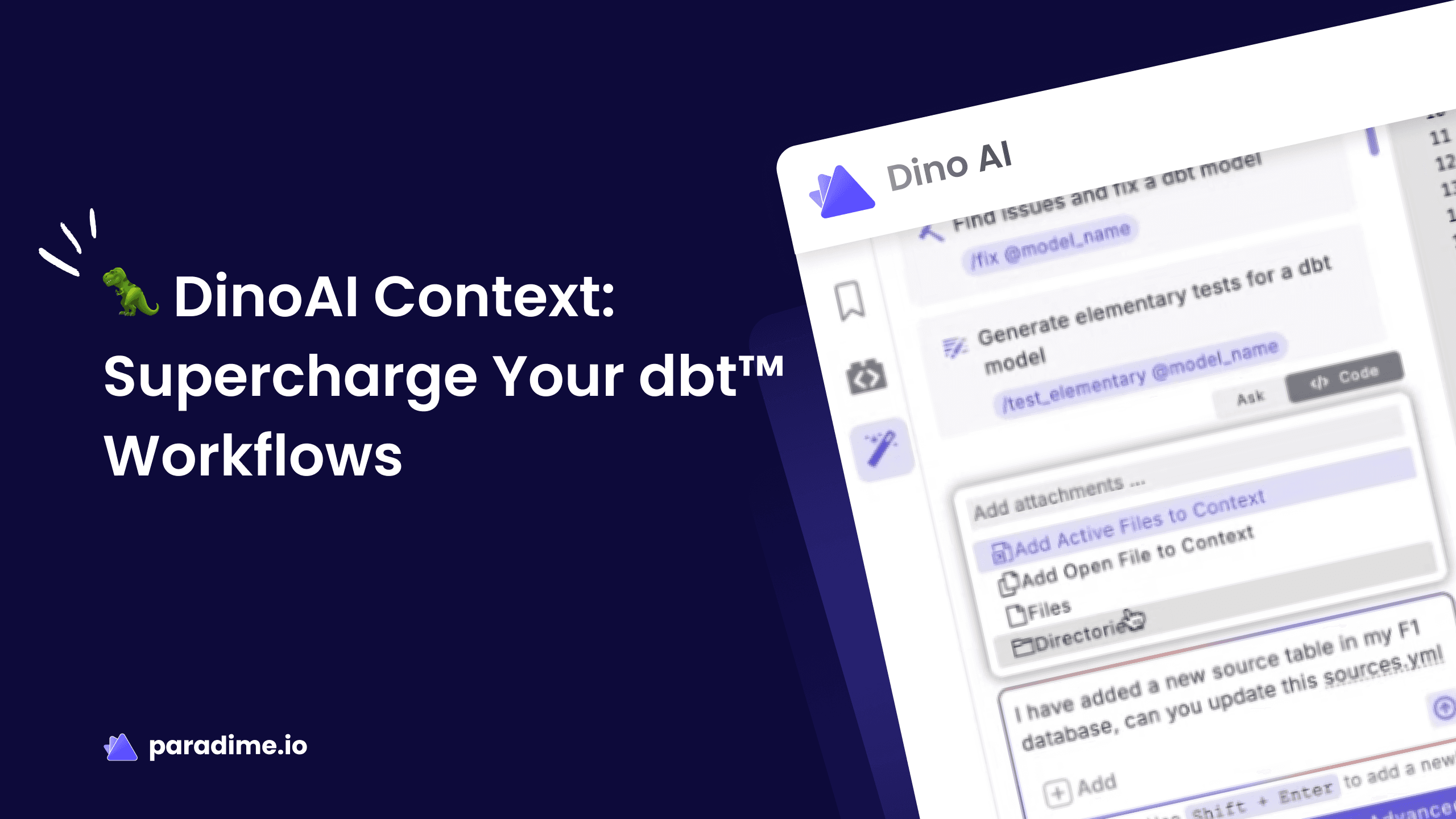

Introducing DinoAI Context: Supercharging Analytics Engineering Workflows

DinoAI's context feature is a game-changer for analytics engineers working with large dbt™ repositories. By allowing you to specify exactly which files and folders DinoAI should focus on, you get faster, more accurate results while maintaining consistent standards. Learn how this powerful feature can reduce development time from hours to seconds while improving code quality across your data pipeline.

Parker Rogers

Mar 12, 2025

6 min read

Last week, we introduced DinoAI v2.0, our groundbreaking "Cursor for Data" that transforms how analytics engineers work. Today, we're diving deeper into one of its most powerful features: Context.

Watch the full livestream on YouTube

The Challenge: Working with Large Repositories

Analytics engineers often work in complex dbt™ repositories with hundreds of models spread across different folders. AI assistants face a basic challenge here. With too little context, the AI doesn't understand your project structure or standards. With too much, scanning your entire repository is slow and can lead to inaccurate results.

With DinoAI's context feature, we've solved this dilemma by giving you precise control over what context the AI agent uses.

What is DinoAI Context?

Context allows you to explicitly tell DinoAI which files, folders, or parts of your project to focus on. As Fabio explained in our livestream:

"Think about it this way: you have a dbt™ repository with hundreds of models in different folders, and you want the agent to only work on a few things. You don't want it to look at the entire repository."

This targeted approach has three benefits. Accuracy is higher, because the AI focuses only on relevant information. Responses are faster, because no time is spent scanning unrelated files. Standards stay consistent, because the coding patterns in the files you select carry over to the code DinoAI writes.
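To make the idea concrete, here is a minimal sketch of context scoping. It assumes that the context is a list of file or folder paths and that the repository is a flat list of file paths. The function `select_context` and the example paths are hypothetical. The code illustrates the concept only and does not show how DinoAI implements context.

```python
from pathlib import PurePosixPath


def select_context(repo_files: list[str], context_paths: list[str]) -> list[str]:
    """Return only the repository files covered by the selected context paths.

    Hypothetical illustration of scoping; not DinoAI's implementation.
    """
    scopes = [PurePosixPath(c) for c in context_paths]
    selected = []
    for f in repo_files:
        path = PurePosixPath(f)
        # A file is in scope if it was selected directly or lives under a selected folder.
        if any(path == scope or scope in path.parents for scope in scopes):
            selected.append(f)
    return selected
```

Suppose a repository contains `models/staging/stg_races.sql`, `models/marts/fct_race_summary.sql`, and `dbt_project.yml`. Calling `select_context(repo, ["models/staging"])` would keep only the staging file, and the agent would never see the rest of the project.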

DinoAI Context in Action

During our livestream, we demonstrated how context transforms the development workflow using Formula One racing data. Here are the key use cases:

1. Updating Sources with Warehouse Context

When new tables get added to your warehouse, updating sources.yml files is tedious. We showed how DinoAI can scan your warehouse for new tables, preserve existing source definitions, and add the new tables with appropriate metadata.

What would typically take 30+ minutes took just 10 seconds.
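The "preserve existing definitions, add new tables" step can be sketched as a merge. The sketch assumes that sources.yml has already been parsed into a Python dict, for example with a YAML library, and that the list of warehouse tables was fetched separately. The function `add_new_tables`, the `f1` source name, and the placeholder descriptions are hypothetical. This is not DinoAI's implementation.

```python
import copy


def add_new_tables(sources_doc: dict, source_name: str, warehouse_tables: list[str]) -> dict:
    """Append warehouse tables missing from one source, keeping existing entries untouched.

    Hypothetical sketch: `sources_doc` is a parsed sources.yml (version/sources/tables).
    """
    doc = copy.deepcopy(sources_doc)  # never mutate the caller's definitions
    for source in doc.get("sources", []):
        if source["name"] != source_name:
            continue
        tables = source.setdefault("tables", [])
        existing = {t["name"] for t in tables}
        for name in sorted(warehouse_tables):
            if name not in existing:
                # Placeholder metadata; a real run would fill in a meaningful description.
                tables.append({"name": name, "description": f"TODO: describe {name}"})
                existing.add(name)
        return doc
    raise KeyError(f"source {source_name!r} not found")
```

New tables are appended in sorted order after the existing entries. Hand-written descriptions on existing tables are therefore never overwritten.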

2. Creating Standardized Staging Models

Maintaining consistent patterns is crucial in dbt™ projects. With context, you can provide an example staging model and ask DinoAI to create new models following the same pattern, ensuring consistent naming conventions (like separating words with underscores).

As Fabio demonstrated, DinoAI identified the patterns from the example file and applied them perfectly to the new model.
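Here is one example of a pattern that a staging model can encode. This minimal sketch renames camelCase or space-separated source columns to snake_case and selects them through dbt's `source()` function. The helper names, the `f1` source, and the column names are hypothetical. In practice the pattern is whatever your own example file contains.

```python
import re


def to_snake_case(name: str) -> str:
    """Convert a column name like 'raceId' or 'fastest lap' to snake_case."""
    # Replace spaces, hyphens, and other separators with underscores.
    s = re.sub(r"[^0-9a-zA-Z]+", "_", name)
    # Insert an underscore at each lowercase/digit -> uppercase boundary.
    s = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", s)
    return s.strip("_").lower()


def staging_select(source_name: str, table: str, columns: list[str]) -> str:
    """Build a staging-model SELECT that renames every column to snake_case (hypothetical pattern)."""
    renames = ",\n".join(f"    {col} as {to_snake_case(col)}" for col in columns)
    # {{{{ and }}}} in an f-string emit the literal {{ and }} of dbt's Jinja syntax.
    return f"select\n{renames}\nfrom {{{{ source('{source_name}', '{table}') }}}}\n"
```

For example, `to_snake_case("fastestLapTime")` yields `fastest_lap_time`.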

3. Building Business Logic with Multiple Context Sources

For more complex tasks, DinoAI can combine context from multiple files. In the demo, we passed the pit stop data and the race data as context at the same time. DinoAI then created a marts model that joins them and adds the appropriate calculations.

This multi-context approach allows DinoAI to understand relationships between your models without scanning your entire project.
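The join-and-aggregate logic behind such a marts model is sketched below in Python for illustration. In a dbt project it would be written as a SQL model. The field names (`race_id`, `name`, `duration_ms`) and the two metrics are assumptions. They are not the model built in the livestream.

```python
from collections import defaultdict


def pit_stops_per_race(races: list[dict], pit_stops: list[dict]) -> list[dict]:
    """Inner-join pit stops to races and compute per-race stop count and average duration.

    Hypothetical field names: races have race_id/name; pit stops have race_id/duration_ms.
    """
    race_by_id = {r["race_id"]: r for r in races}
    totals = defaultdict(lambda: [0, 0])  # race_id -> [stop_count, total_ms]
    for stop in pit_stops:
        t = totals[stop["race_id"]]
        t[0] += 1
        t[1] += stop["duration_ms"]
    rows = []
    for race_id, (count, total_ms) in totals.items():
        race = race_by_id.get(race_id)
        if race is None:  # inner join: drop stops with no matching race
            continue
        rows.append({
            "race_id": race_id,
            "race_name": race["name"],
            "pit_stop_count": count,
            "avg_pit_stop_seconds": round(total_ms / count / 1000, 3),
        })
    return sorted(rows, key=lambda r: r["race_id"])
```

Races without any pit stops drop out, as they would in a SQL inner join. A left join from races would keep them with zero stops.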

4. Bulk Documentation Made Simple

Documentation often gets neglected because it's time-consuming. DinoAI can now take an entire directory as context, scan all models within it, and generate a comprehensive schema.yml with descriptions for every column in one go.

No more documenting one model at a time!
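The bulk-documentation step can be sketched as two parts: list every model in a directory, then emit one schema.yml covering all of them. This minimal sketch assumes that column names have already been looked up, for example from the warehouse. It writes placeholder descriptions where DinoAI would write real ones. The function names are hypothetical.

```python
from pathlib import Path


def list_models(directory: str) -> list[str]:
    """Return the model names (.sql file stems) directly inside a directory."""
    return sorted(p.stem for p in Path(directory).glob("*.sql"))


def build_schema_yml(columns_by_model: dict[str, list[str]]) -> str:
    """Render a schema.yml documenting every column of every model (placeholder text)."""
    lines = ["version: 2", "", "models:"]
    for model in sorted(columns_by_model):
        lines.append(f"  - name: {model}")
        lines.append(f'    description: "TODO: describe {model}"')
        lines.append("    columns:")
        for col in columns_by_model[model]:
            lines.append(f"      - name: {col}")
            lines.append(f'        description: "TODO: describe {col}"')
    return "\n".join(lines) + "\n"
```

Building the YAML text directly keeps the sketch dependency-free. A real tool would more likely serialize a dict with a YAML library.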

5. Project Configuration Aligned with Structure

Keeping your dbt_project.yml in sync with your folder structure is another tedious task. DinoAI can analyze your folder structure and update the materialization, schema, and tag configuration for each folder, so your project organization stays consistent automatically.
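`+materialized`, `+schema`, and `+tags` are standard dbt model configs that can be set per folder in dbt_project.yml. The per-layer values in this sketch are assumed conventions, not recommendations from the livestream. The sketch derives the `models:` block from the top-level folders under `models/`. `LAYER_CONFIG` and `build_models_config` are hypothetical names.

```python
import copy
from pathlib import PurePosixPath

# Hypothetical per-layer conventions; replace with your project's own standards.
LAYER_CONFIG = {
    "staging": {"+materialized": "view", "+schema": "staging", "+tags": ["staging"]},
    "intermediate": {"+materialized": "ephemeral", "+tags": ["intermediate"]},
    "marts": {"+materialized": "table", "+schema": "marts", "+tags": ["marts"]},
}


def build_models_config(project: str, model_files: list[str]) -> dict:
    """Derive the `models:` block of dbt_project.yml from top-level model folders."""
    layers = set()
    for f in model_files:
        parts = PurePosixPath(f).parts
        # Expect paths like models/<layer>/.../<model>.sql
        if len(parts) >= 3 and parts[0] == "models":
            layers.add(parts[1])
    project_cfg = {}
    for layer in sorted(layers):
        # Unknown folders get only a tag; deepcopy keeps LAYER_CONFIG untouched.
        project_cfg[layer] = copy.deepcopy(LAYER_CONFIG.get(layer, {"+tags": [layer]}))
    return {"models": {project: project_cfg}}
```

The resulting dict mirrors the nesting dbt expects under `models:` and can be serialized to YAML. Note that by default dbt combines a custom `+schema` with the target schema rather than replacing it.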

The Power of Precision

As Kaustav noted during the livestream, this contextual approach dramatically improves output quality:

"When you narrow the focus, you increase the quality of output... It's a much more natural way to interact with the agent."

Fabio added that this mirrors how we naturally communicate:

"If I had to tell one of our engineers to update a given set of our internal pipeline, I probably wouldn't tell them, 'Hey, go and find out which one is the set of sources that maybe has the table and maybe has not.' That's just not how we work."

Coming Next Week: Dino Rules

We're not stopping here. Next week, we'll explore Dino Rules – a way to give persistent instructions to the DinoAI agent across all your prompts. This will allow you to set consistent patterns, naming conventions, and more.

Ready to Try DinoAI Context?

Current Paradime users can access this feature today. New users can start a free trial to experience how DinoAI's context feature can transform your analytics engineering workflow.

The days of tedious, manual dbt development are over. With DinoAI context, you can focus on solving business problems while the AI handles the implementation details – all while maintaining your project's standards and patterns.

Try DinoAI for free today and see the difference for yourself!